Introduction

Over the past few years, AI has evolved at an unprecedented pace, rapidly shifting from hype-filled experimentation to enterprise adoption. We are now witnessing a critical convergence: a moment where AI frameworks, tooling, and methodologies are beginning to standardise, enabling broader adoption beyond early adopters.

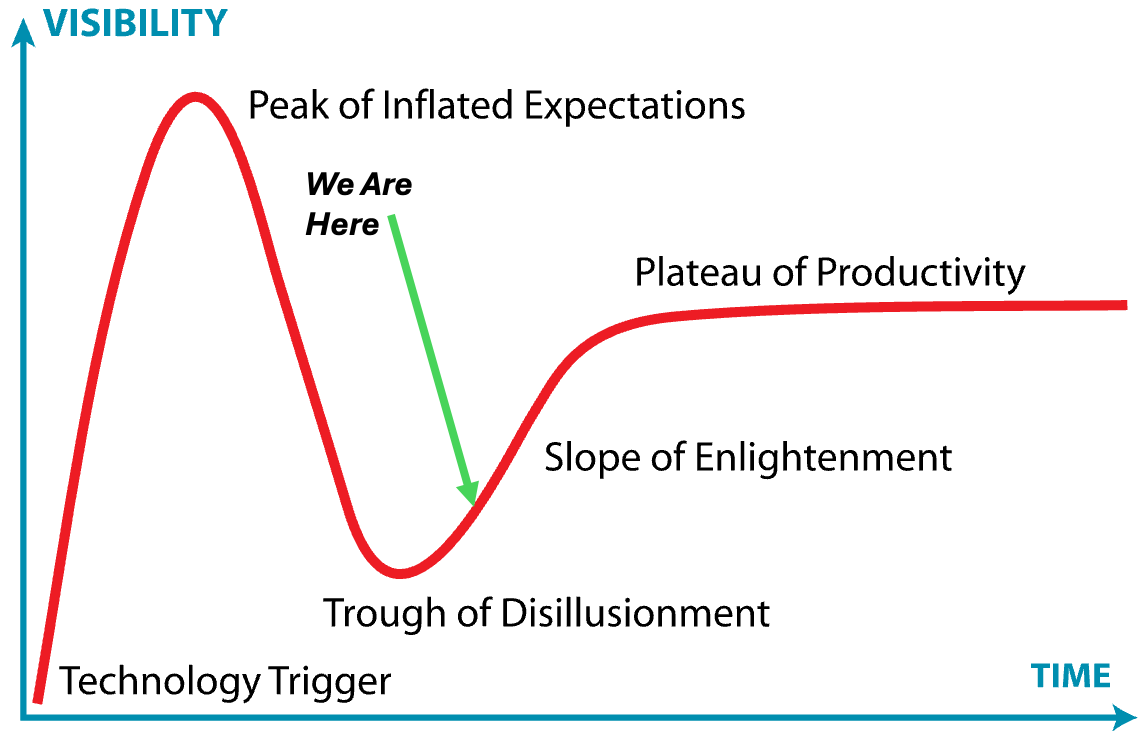

This convergence signals a shift in AI’s adoption curve. If we consider Rogers’ Diffusion of Innovations model, we are moving beyond the Innovators phase and into the Early Adopters phase. Similarly, in the Gartner Hype Cycle, AI is starting to climb out of the Trough of Disillusionment and onto the Slope of Enlightenment, a stage where real business value begins to emerge from practical, scalable implementations.

It’s fascinating to watch this unfold in real time. A year ago, it felt like every AI discussion was just an extension of the generative AI hype, with most ending on the same message: companies wanted to “do AI” but were unsure how to actually drive value from it. Now the conversations are shifting. AI frameworks are stabilising and repeatable patterns are emerging, making AI at scale a realistic option for many companies.

What’s driving this convergence?

For me, a few key elements stand out:

- The Interoperability Shift

- Compound AI Systems

Multi-Provider AI Agents - The Interoperability Shift

One of the biggest challenges in enterprise AI adoption has been the inability to generate a tangible return on investment from AI initiatives, especially ROI that scales with the number of initiatives. Many organisations have struggled to move beyond proofs of concept (PoCs), resulting in what can best be described as PoC purgatory.

This is a symptom of broader issues within an enterprise, such as incorrect use case selection, the lack of an AI adoption strategy, and a lack of reusable components or patterns to reduce the cost of each outcome.

The Cambridge Dictionary defines interoperability as “the ability to work together with other systems or pieces of equipment”.

In the context of AI, I would extend this definition to:

“The ability of multiple AI models, agents, and services to communicate, collaborate and orchestrate tasks across different platforms, providers, and ecosystems.”

Instead of being locked into a single framework or proprietary AI system, businesses can now leverage the strengths of multiple providers, models, and frameworks seamlessly.

This shift means AI no longer operates within isolated use case-based silos. It can instead function as a flexible, composable, building-block-like ecosystem where the appropriate tools, agents, etc. are orchestrated dynamically.

My view is that interoperability has been the missing piece for true enterprise AI adoption. We’ve seen powerful AI models emerge, and even effective use cases with a real ROI, but each one was largely confined within its own ecosystem. The ability to mix and match agents, frameworks, and tooling as required without having to redesign architectures will be a game-changer for AI scalability.

How?

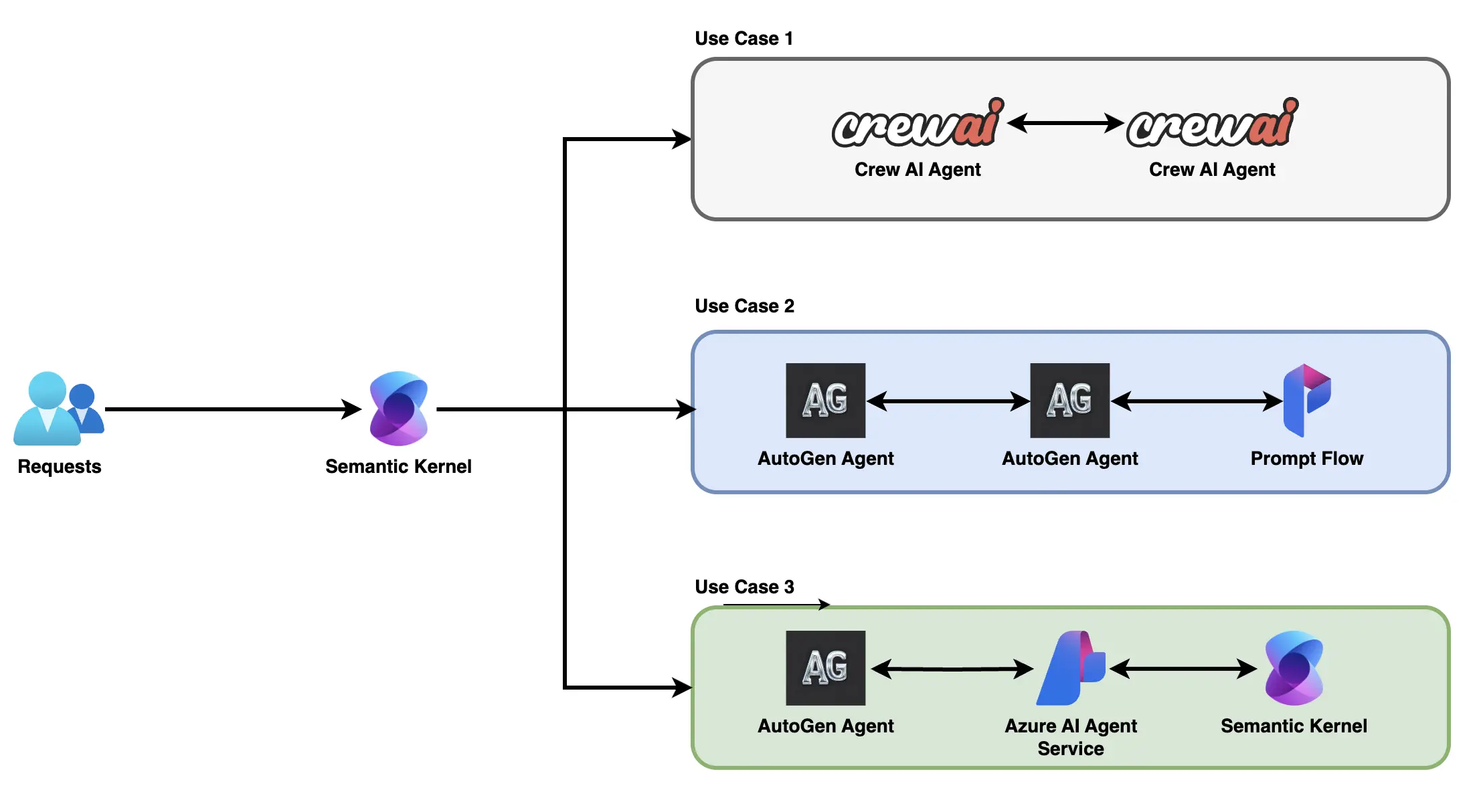

To achieve true interoperability, AI frameworks need to evolve, and they are starting to. In Part 1, I discussed Semantic Kernel’s Agent Framework RC1, which enables multi-provider AI agent support. This, paired with the ability to leverage the Model Context Protocol within Semantic Kernel for tools across agents, is a powerful combination.

This advancement shifts AI from isolated, single-agent systems to composable, modular AI architectures that can be dynamically orchestrated across different use cases.
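To make the idea of composable, provider-agnostic agents a little more concrete, here is a minimal Python sketch. It does not use Semantic Kernel's actual API; the `Agent` protocol, the two provider classes, and `run_pipeline` are hypothetical names I've made up for illustration, and the provider calls are stubbed out with simple string formatting.

```python
from dataclasses import dataclass
from typing import Protocol


class Agent(Protocol):
    """Hypothetical contract: any object with a name and respond() can take part."""

    name: str

    def respond(self, task: str) -> str: ...


@dataclass
class ProviderAAgent:
    """Stand-in for an agent backed by one (hypothetical) model provider."""

    name: str = "provider-a"

    def respond(self, task: str) -> str:
        # A real agent would call provider A's SDK here; this stub tags the task.
        return f"[{self.name}] {task}"


@dataclass
class ProviderBAgent:
    """Stand-in for an agent backed by a different (hypothetical) provider."""

    name: str = "provider-b"

    def respond(self, task: str) -> str:
        # A real agent would call provider B's SDK here; this stub tags the task.
        return f"[{self.name}] {task}"


def run_pipeline(agents: list[Agent], task: str) -> str:
    """Pass the task through each agent in turn, regardless of its provider."""
    result = task
    for agent in agents:
        result = agent.respond(result)
    return result
```

Calling `run_pipeline([ProviderAAgent(), ProviderBAgent()], "summarise Q3 sales")` chains both stubs. The point of the sketch is that the orchestration code depends only on the shared contract, so swapping or adding a provider should not require redesigning the pipeline.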

The illustration above highlights an example of a multi-use case compound AI system, where multiple frameworks, agents, and tools are orchestrated through Semantic Kernel, enabling the interoperability mentioned above.

Now we can provide a single point of entry (or multiple) for many AI use cases or teams of agents operating to achieve common goals. Whilst the example is simple, you can quickly see how a hierarchical approach, with teams of teams of agents, could be managed, interacted with, and governed from a single point, especially when used in conjunction with an API-driven AI Landing Zone.
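As a rough illustration of the "teams of teams" idea, the sketch below models a hierarchy of agents behind one entry point. Everything here is an assumption for illustration: `Agent`, `Team`, `EntryPoint`, and the slash-separated routing path are hypothetical and not part of Semantic Kernel or any landing zone product, and the audit log is only a stand-in for real governance controls.

```python
from dataclasses import dataclass, field
from typing import Callable, Union


@dataclass
class Agent:
    """Hypothetical leaf agent: a name plus a function that handles a task."""

    name: str
    handle: Callable[[str], str]


@dataclass
class Team:
    """Hypothetical team whose members are agents or other teams."""

    name: str
    members: dict[str, Union["Agent", "Team"]] = field(default_factory=dict)

    def add(self, member: Union["Agent", "Team"]) -> "Team":
        self.members[member.name] = member
        return self  # returning self allows chained add() calls

    def dispatch(self, path: str, task: str) -> str:
        # Resolve the first path segment, then recurse into nested teams.
        head, _, rest = path.partition("/")
        member = self.members[head]  # raises KeyError for an unknown member
        if isinstance(member, Team):
            return member.dispatch(rest, task)
        if rest:
            raise ValueError(f"'{head}' is an agent and has no members")
        return member.handle(task)


@dataclass
class EntryPoint:
    """Single point of entry that records every request before routing it."""

    root: Team
    audit_log: list[str] = field(default_factory=list)

    def submit(self, path: str, task: str) -> str:
        self.audit_log.append(path)  # one place to observe all traffic
        return self.root.dispatch(path, task)
```

For example, a `finance` team containing an `invoices` agent can sit alongside a top-level `helpdesk` agent under one root team, and a call such as `entry.submit("finance/invoices", "match PO 1")` is logged once at the entry point before being routed down the hierarchy.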

This structured approach to AI orchestration and development unlocks scalability, operational consistency, and governance, unleashing AI as a true enterprise system component to drive business value rather than a siloed feature.

Microservices to Compound AI Systems

The second key element contributing to this convergence is the evolution of agentic architecture. Those familiar with microservices may have noticed that the latest agentic AI architectures resemble a microservice-like architecture pattern. Whilst these systems do follow some of the same principles, they are much more than rebranded microservices.

Microservices

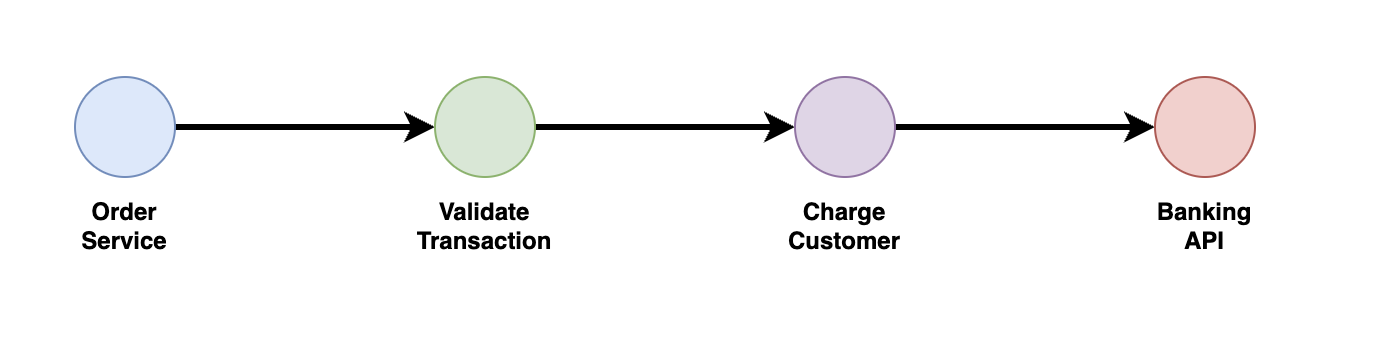

Many of us are familiar with microservices architectures, but for those who aren’t, microservices refer to an architecture where an application is composed of small, independent services, each performing a specific function and communicating via APIs (usually).

Microservices allow applications to be:

- Scalable - Individual services can be scaled independently.

- Modular - Each service is built, deployed, and updated separately.

- Resilient - A failure in one service does not necessarily bring down the entire system.

However, microservices are inherently static. Each service follows a predefined role and workflow within the system.

As an example, take the payment processing microservice within the e-commerce platform illustrated above. It is responsible for validating transactions, charging a customer account, and handling refunds. It always interacts with the order service to confirm purchases and the banking API to complete transactions. Regardless of external factors, this microservice will always perform the same set of functions in the same workflow. This ensures consistency, but in the dynamic world of agentic AI, a slightly different approach is required.
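The static nature of that workflow can be sketched in a few lines of Python. This is a simplified illustration rather than a real payment implementation: `Order`, `OrderService`, `BankingAPI`, and `PaymentService` are hypothetical stand-ins, refunds are left out for brevity, and the external calls are replaced with stubs that return strings.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    amount: float


class OrderService:
    """Hypothetical stand-in for the order service."""

    def confirm(self, order: Order) -> str:
        return f"order {order.order_id} confirmed"


class BankingAPI:
    """Hypothetical stand-in for the external banking API."""

    def charge(self, order: Order) -> str:
        return f"charged {order.amount:.2f}"


class PaymentService:
    """Runs the same three steps, in the same order, for every order."""

    def __init__(self, orders: OrderService, bank: BankingAPI) -> None:
        self.orders = orders
        self.bank = bank

    def process(self, order: Order) -> list[str]:
        # Step 1: validate the transaction.
        if order.amount <= 0:
            raise ValueError("amount must be positive")
        # Steps 2 and 3: charge via the bank, then confirm with the order service.
        return [
            "validated",
            self.bank.charge(order),
            self.orders.confirm(order),
        ]
```

Whatever order is passed to `PaymentService.process`, the sequence of steps is fixed in code: validate, charge, confirm. Nothing in the service decides at runtime to take a different route.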

Compound AI Systems

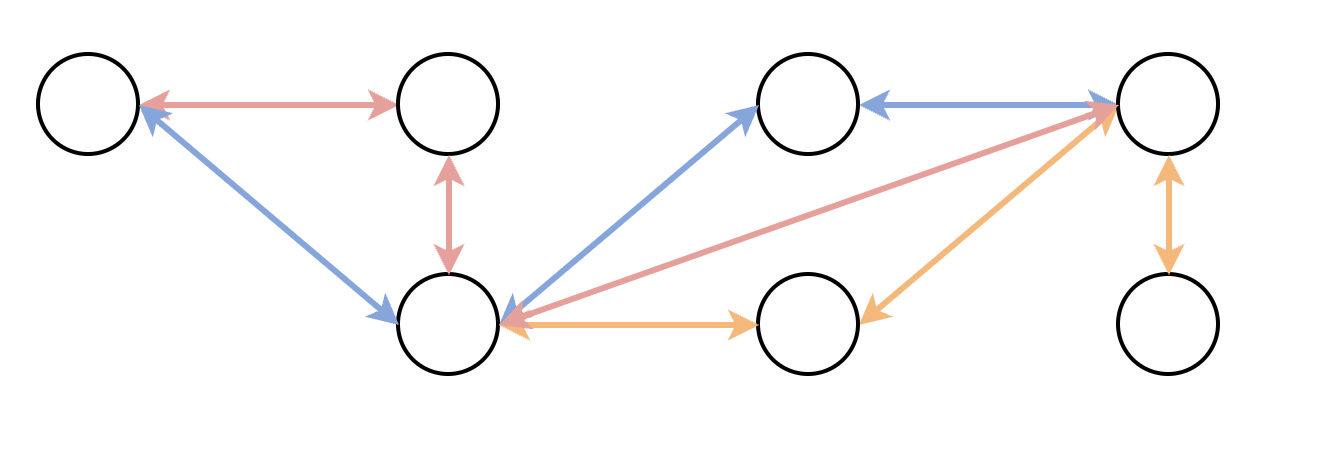

Compound AI Systems, a term coined by BAIR (Berkeley Artificial Intelligence Research), extend the principles of microservices by introducing AI-driven autonomy and adaptability. Instead of static service interactions, agentic AI components dynamically collaborate, reason, and adjust their behaviour and actions in real time.

Compound AI Systems allow applications to be:

- Self-Orchestrating - Agents determine workflows based on real-time data and context.

- Collaborative - Multi-agent cooperation enabling flexible outcomes.

- Contextually Aware - Adjust behaviours based on external conditions and learned experience.

The illustration above shows the dynamic nature of collaboration in a Compound AI System. As each transaction or task is completed, the system can change direction and leverage other components within its ecosystem to achieve the desired outcome. It is this ability to break away from static interactions and adapt dynamically to real-world complexity, based on the problems presented, that is key to the convergence of AI within the enterprise landscape.
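To contrast this with a fixed microservice workflow, here is a deliberately small Python sketch in which each agent decides who should act next based on the shared context. It is an assumption-heavy toy: the agent names, the `Context` structure, and the 1000 threshold are all hypothetical, and plain rules stand in for the model-driven reasoning a real agent would use.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass
class Context:
    """Shared state that agents read from and write to."""

    task: str
    facts: dict[str, Any] = field(default_factory=dict)
    trace: list[str] = field(default_factory=list)


# An agent does some work, then names the next agent (or None to stop).
AgentFn = Callable[[Context], Optional[str]]


def triage(ctx: Context) -> Optional[str]:
    # Simple rule standing in for model reasoning: large amounts need a check.
    ctx.facts["high_value"] = ctx.facts.get("amount", 0) > 1000
    return "fraud_check" if ctx.facts["high_value"] else "payment"


def fraud_check(ctx: Context) -> Optional[str]:
    ctx.facts["cleared"] = True
    return "payment"


def payment(ctx: Context) -> Optional[str]:
    ctx.facts["paid"] = True
    return None  # nothing further to do


AGENTS: dict[str, AgentFn] = {
    "triage": triage,
    "fraud_check": fraud_check,
    "payment": payment,
}


def run(ctx: Context, start: str = "triage", max_steps: int = 10) -> Context:
    """Follow whichever path the agents choose, with a cap on total steps."""
    current: Optional[str] = start
    for _ in range(max_steps):
        if current is None:
            break
        ctx.trace.append(current)
        current = AGENTS[current](ctx)
    return ctx
```

Running `run(Context("pay", {"amount": 50}))` and `run(Context("pay", {"amount": 5000}))` produces different traces, because the path is chosen at runtime from the context rather than fixed in the workflow. The `max_steps` cap is one simple guard against agents handing off to each other indefinitely.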

Final Thoughts

The convergence of AI through interoperability and Compound AI Systems signals, to me, that we are moving beyond experimentation and into the beginnings of the Slope of Enlightenment, where AI starts to deliver measurable business impact.

However, navigating this transition requires more than just adopting the latest AI tools. It demands a fundamental shift in mindset—rethinking how AI is integrated, breaking free from PoC purgatory, and ensuring holistic business buy-in.

- Leverage interoperability to break free from one-off platforms and siloed use cases.

- Adopt agentic architecture based on Compound AI Systems that dynamically adapt and orchestrate based on needs.

- Identify the correct AI use cases, balancing technical feasibility with real business value.

The future of AI isn’t about hype - it’s about delivering real, transformative value.